1. GlusterFS Setup

| Hostname | IP | GlusterFS peer |
|---|---|---|
| k8s-master | 192.168.1.156 | no |
| k8s-node1 | 192.168.1.14 | yes |
| k8s-node2 | 192.168.1.15 | yes |
| k8s-node3 | 192.168.1.7 | yes |

On k8s-node1, k8s-node2, and k8s-node3, attach one additional disk to each VM from ESXi (do not format or mount it).

The three brick server nodes must keep their clocks synchronized:

    # yum install chrony -y
    # egrep -v "^$|^#" /etc/chrony.conf
    server 172.20.0.252 iburst
    driftfile /var/lib/chrony/drift
    makestep 1.0 3
    rtcsync
    logdir /var/log/chrony

Enable the service at boot, then restart it:

    # systemctl enable chronyd.service
    # systemctl restart chronyd.service

Check the status:

    # systemctl status chronyd.service
    # chronyc sources -v

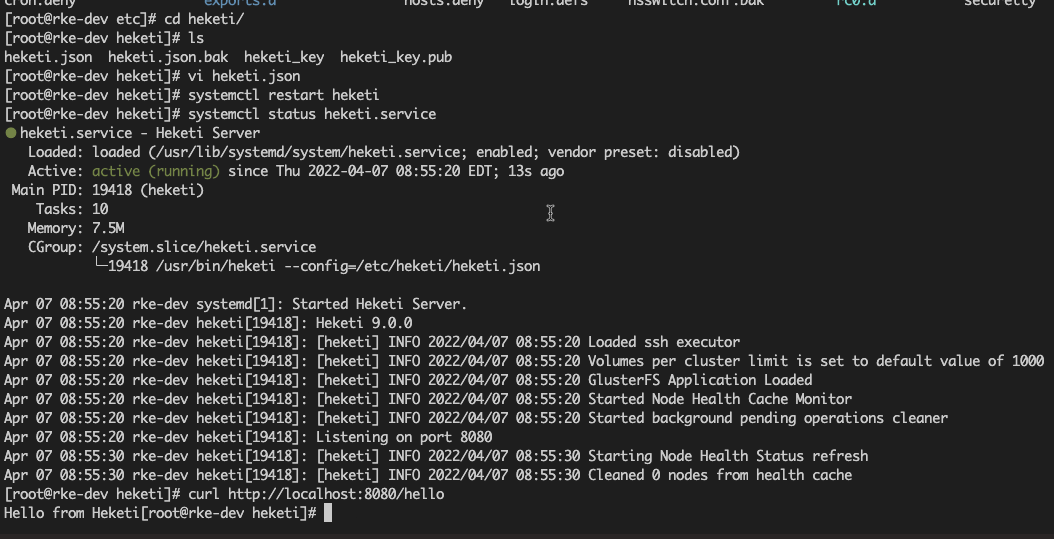
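
The `chronyc sources` check above can also be scripted. The sketch below relies on the convention that `chronyc sources` marks the currently selected source with `^*` at the start of its line; the function name `has_selected_source` is made up for illustration and is not part of chrony.

```shell
#!/usr/bin/env bash
# Succeeds when the `chronyc sources` output on stdin contains a line
# starting with "^*", i.e. a server that chronyd is currently synced to.
has_selected_source() {
  grep -qE '^\^\*'
}

# Run the check only where chronyc is installed.
if command -v chronyc >/dev/null 2>&1; then
  if chronyc sources | has_selected_source; then
    echo "chrony: synchronized to a source"
  else
    echo "chrony: no source selected yet"
  fi
fi
```

Running this on each of the three brick nodes gives a quick yes/no answer without reading the full table.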

    {
      "_port_comment": "Heketi Server Port Number",
      "port": "8080",

      "_use_auth": "Enable JWT authorization. Please enable for deployment",
      "use_auth": false,

      "_jwt": "Private keys for access",
      "jwt": {
        "_admin": "Admin has access to all APIs",
        "admin": {
          "key": "admin@123"
        },
        "_user": "User only has access to /volumes endpoint",
        "user": {
          "key": "admin@123"
        }
      },

      "_glusterfs_comment": "GlusterFS Configuration",
      "glusterfs": {
        "_executor_comment": [
          "Execute plugin. Possible choices: mock, ssh",
          "mock: This setting is used for testing and development.",
          " It will not send commands to any node.",
          "ssh: This setting will notify Heketi to ssh to the nodes.",
          " It will need the values in sshexec to be configured.",
          "kubernetes: Communicate with GlusterFS containers over",
          " Kubernetes exec api."
        ],
        "executor": "ssh",

        "_sshexec_comment": "SSH username and private key file information",
        "sshexec": {
          "keyfile": "/etc/heketi/heketi_key",
          "user": "root",
          "port": "22",
          "fstab": "/etc/fstab"
        },

        "_kubeexec_comment": "Kubernetes configuration",
        "kubeexec": {
          "host": "https://kubernetes.host:8443",
          "cert": "/path/to/crt.file",
          "insecure": false,
          "user": "kubernetes username",
          "password": "password for kubernetes user",
          "namespace": "OpenShift project or Kubernetes namespace",
          "fstab": "Optional: Specify fstab file on node. Default is /etc/fstab"
        },

        "_db_comment": "Database file name",
        "db": "/var/lib/heketi/heketi.db",

        "_loglevel_comment": [
          "Set log level. Choices are:",
          " none, critical, error, warning, info, debug",
          "Default is warning"
        ],
        "loglevel": "debug"
      }
    }
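
Once Heketi is running with this configuration, a quick reachability check helps before going further. The sketch below assumes Heketi listens on 192.168.1.156:8080 (the address used as `resturl` in the StorageClass later in these notes) and uses Heketi's `/hello` endpoint; the function name `check_heketi` is illustrative only.

```shell
#!/usr/bin/env bash
# Query Heketi's /hello endpoint and print the reply.
# The default URL is an assumption taken from the StorageClass resturl.
check_heketi() {
  local url="${1:-http://192.168.1.156:8080}"
  local reply
  # -f: fail on HTTP errors, -sS: quiet but still show errors.
  if ! reply="$(curl -fsS --max-time 5 "$url/hello")"; then
    echo "Heketi not reachable at $url" >&2
    return 1
  fi
  echo "$reply"
}
```

Calling `check_heketi` with no argument queries the default address; pass another base URL as the first argument to test a different host.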

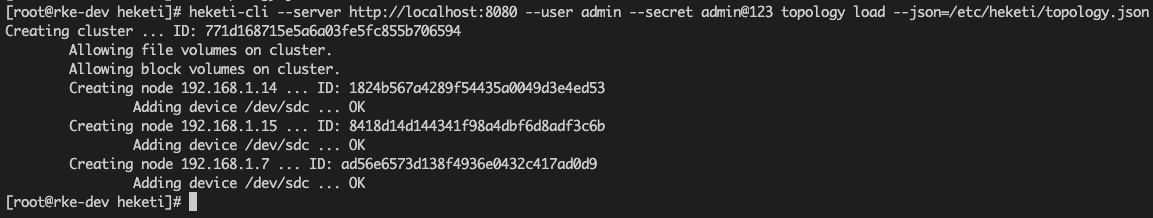

    {
      "clusters": [
        {
          "nodes": [
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "192.168.1.14"
                  ],
                  "storage": [
                    "192.168.1.14"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdc"
              ]
            },
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "192.168.1.15"
                  ],
                  "storage": [
                    "192.168.1.15"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdc"
              ]
            },
            {
              "node": {
                "hostnames": {
                  "manage": [
                    "192.168.1.7"
                  ],
                  "storage": [
                    "192.168.1.7"
                  ]
                },
                "zone": 1
              },
              "devices": [
                "/dev/sdc"
              ]
            }
          ]
        }
      ]
    }
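
A topology file like the one above is typically registered with `heketi-cli topology load`. The following is a minimal sketch, not the author's exact procedure: the file path `/etc/heketi/topology.json` is hypothetical, the server address is taken from the StorageClass `resturl`, and the `run` helper is made up so the script only prints the commands unless `DRY_RUN=0` is set. With `use_auth` set to `false`, the `--user` and `--secret` flags should only matter once authentication is enabled.

```shell
#!/usr/bin/env bash
# Assumed values: adjust to your environment.
HEKETI_SERVER="http://192.168.1.156:8080"   # same address as resturl in the StorageClass
HEKETI_USER="admin"
HEKETI_KEY='admin@123'                      # jwt.admin.key from the Heketi configuration
TOPOLOGY_FILE="/etc/heketi/topology.json"   # hypothetical path for the topology JSON

# Print the command when DRY_RUN is 1 (the default); execute it when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    printf '+ %s\n' "$*"
  else
    "$@"
  fi
}

# Register the nodes and devices described in the topology file.
load_topology() {
  run heketi-cli --server "$HEKETI_SERVER" --user "$HEKETI_USER" \
    --secret "$HEKETI_KEY" topology load --json="$TOPOLOGY_FILE"
}

# Show the resulting cluster, nodes, and devices.
show_topology() {
  run heketi-cli --server "$HEKETI_SERVER" --user "$HEKETI_USER" \
    --secret "$HEKETI_KEY" topology info
}

load_topology
show_topology
```

Review the printed commands first, then rerun with `DRY_RUN=0` on the host where `heketi-cli` is installed.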

    apiVersion: v1
    kind: Secret
    metadata:
      name: heketi-secret
      namespace: default
    data:
      # base64 encoded password. Eg: echo -n "mypassword" | base64
      key: YWRtaW5AMTIz
    type: kubernetes.io/glusterfs
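
The `key` value in the Secret is the Heketi admin key (`admin@123` in the configuration above) encoded as base64. A small sketch of the encoding step follows; the function name `encode_key` is illustrative.

```shell
#!/usr/bin/env bash
# Encode a Heketi key for the Secret's data.key field.
# printf '%s' avoids the trailing newline that a plain echo would add,
# which would otherwise end up inside the encoded password.
encode_key() {
  printf '%s' "$1" | base64
}

encode_key 'admin@123'   # prints YWRtaW5AMTIz
```

Decoding the stored value with `base64 --decode` is a quick way to confirm that the Secret and the Heketi configuration agree.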

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: gluster-heketi-storageclass
    provisioner: kubernetes.io/glusterfs
    reclaimPolicy: Delete
    parameters:
      resturl: "http://192.168.1.156:8080"
      restauthenabled: "true"
      restuser: "admin"
      secretNamespace: "default"
      secretName: "heketi-secret"
      volumetype: "replicate:2"
    allowVolumeExpansion: true

    Name:                  gluster-heketi-storageclass
    IsDefaultClass:        No
    Annotations:           kubectl.kubernetes.io/last-applied-configuration={"allowVolumeExpansion":true,"apiVersion":"storage.k8s.io/v1","kind":"StorageClass","metadata":{"annotations":{},"name":"gluster-heketi-storageclass"},"parameters":{"restauthenabled":"true","resturl":"http://192.168.1.156:8080","restuser":"admin","secretName":"heketi-secret","secretNamespace":"default","volumetype":"replicate:2"},"provisioner":"kubernetes.io/glusterfs","reclaimPolicy":"Delete"}
    Provisioner:           kubernetes.io/glusterfs
    Parameters:            restauthenabled=true,resturl=http://192.168.1.156:8080,restuser=admin,secretName=heketi-secret,secretNamespace=default,volumetype=replicate:2
    AllowVolumeExpansion:  True
    MountOptions:          <none>
    ReclaimPolicy:         Delete
    VolumeBindingMode:     Immediate
    Events:                <none>

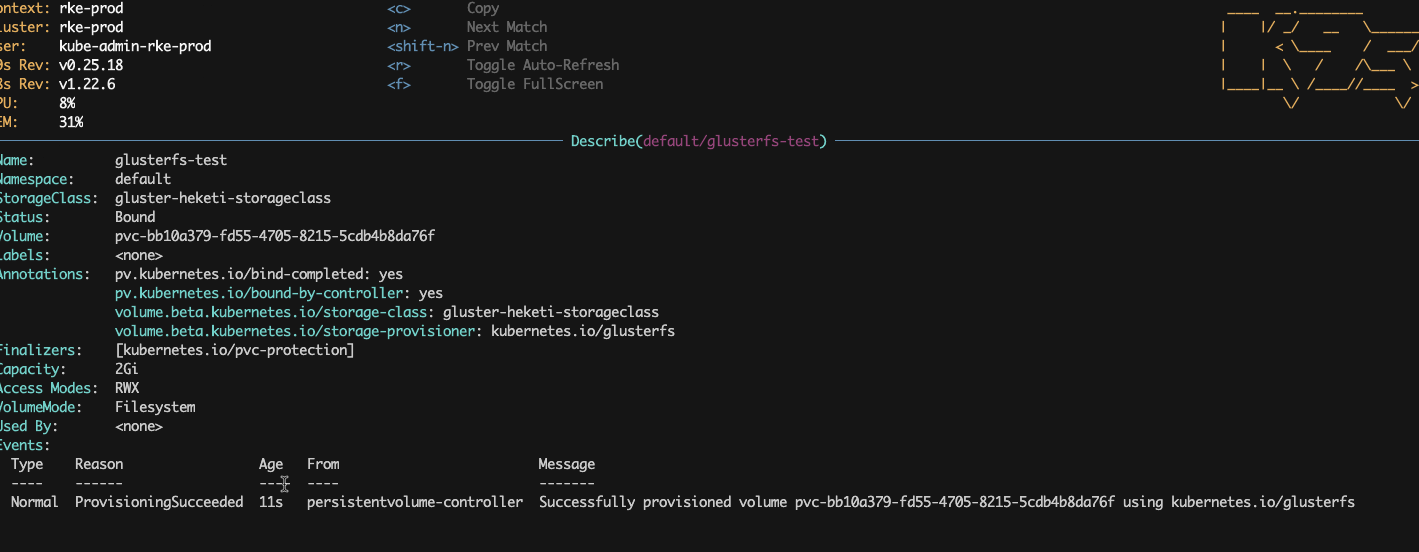

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: glusterfs-test
      namespace: default
      annotations:
        volume.beta.kubernetes.io/storage-class: "gluster-heketi-storageclass"
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 2Gi
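
After the claim is applied, dynamic provisioning has worked once the PVC reaches the `Bound` phase. The sketch below polls for that with `kubectl`; the function name `wait_for_pvc_bound` and its retry defaults are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Poll a PVC until its phase is Bound, or give up after a number of attempts.
# Arguments: name, namespace (default "default"), attempts (30), delay in seconds (2).
wait_for_pvc_bound() {
  local name="$1" namespace="${2:-default}" attempts="${3:-30}" delay="${4:-2}"
  local i=0 phase=""
  while [ "$i" -lt "$attempts" ]; do
    # jsonpath extracts only the phase string, e.g. Pending or Bound.
    phase="$(kubectl get pvc "$name" -n "$namespace" -o jsonpath='{.status.phase}' 2>/dev/null)"
    if [ "$phase" = "Bound" ]; then
      echo "PVC $namespace/$name is Bound"
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "PVC $namespace/$name not Bound after $attempts attempts (last phase: ${phase:-unknown})" >&2
  return 1
}
```

For the claim above, the call would be `wait_for_pvc_bound glusterfs-test default`, run after `kubectl apply -f` on the manifest file. If the claim stays `Pending`, `kubectl describe pvc glusterfs-test` usually shows the provisioner's error in its events.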